Project Spring Fling

digital humanities for assessment @ auraria library

2. Modeling Student Responses

My first task for this assessment was topic modeling, a digital humanities (DH) process in which a tool learns a corpus and produces a list of cohesive topics that appear in it. My tool of choice was MALLET, an open-source topic modeling application that you can run either from the command line or through a graphical user interface (GUI). In both cases, you give MALLET a directory of texts; it learns the texts and generates lists of words that form topics. You can adjust the number of topics and the number of words in each topic, as well as other settings that affect MALLET’s performance. Output is delivered as .csv files to the directory of your choosing.

MALLET was easy to use once I got it running, but getting it to work properly took some effort. Initially, I wanted to run the application from the command line to practice and improve those skills. For some reason, MALLET never recognized my environment variable, and after several attempts to fix the issue, I switched to the GUI. The GUI version of MALLET was simple to install and simple to run, but I hit another problem when I tried to model the student response transcriptions. Instead of a directory of texts, I had a single .txt file containing all of the transcriptions. That was handy and compact, but not useful for topic modeling, because MALLET needs each response as a separate document. I had to separate each response into its own .txt file and save the files together in a single directory. Once the responses were separated, MALLET easily learned the directory and generated topics.
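For anyone facing the same single-file problem, the separation step could be scripted rather than done by hand. The following is a minimal sketch, not the process I actually used. It assumes each response sits on its own line of the combined file, and the function name `split_responses` and the `response_0001.txt` naming pattern are made up for illustration.

```python
from pathlib import Path


def split_responses(combined_file, output_dir):
    """Write each non-empty line of combined_file to its own .txt file.

    Assumption: one response per line. Adjust the splitting logic if the
    transcriptions are separated some other way (blank lines, IDs, etc.).
    """
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)

    text = Path(combined_file).read_text(encoding="utf-8")
    # Drop blank lines and surrounding whitespace.
    responses = [line.strip() for line in text.splitlines() if line.strip()]

    written = []
    for number, response in enumerate(responses, start=1):
        # Zero-padded names keep the files in their original order.
        target = out / f"response_{number:04d}.txt"
        target.write_text(response + "\n", encoding="utf-8")
        written.append(target)
    return written
```

Calling `split_responses("all_responses.txt", "responses")` (both names are placeholders) would leave a `responses` directory that can be handed to MALLET as the input directory.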

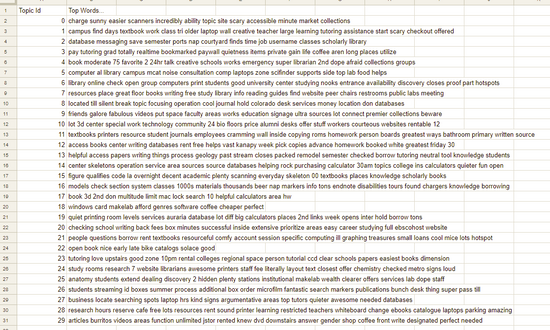

Once I knew MALLET could use my directory, I had to decide how many topics I wanted in my output. I started with 10, but the topics were disjointed. I then moved to 20, which gave better results but was still not as coherent as I wanted. I also tried 30, but those topics were almost as bad as the first 10.
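I ran these comparisons through the GUI, but the same 10/20/30 comparison could be set up for command-line MALLET. The sketch below only builds the `train-topics` commands and prints them; it does not run MALLET. The path `bin/mallet`, the corpus file `responses.mallet` (which would first have to be created with MALLET's `import-dir` command), and the output file names are all assumptions for illustration.

```python
def train_topics_command(num_topics, num_top_words=20,
                         mallet_bin="bin/mallet",
                         corpus="responses.mallet"):
    """Build (but do not run) a MALLET train-topics command.

    mallet_bin, corpus, and the output file names are hypothetical
    placeholders; change them to match your own installation.
    """
    return [
        mallet_bin, "train-topics",
        "--input", corpus,
        "--num-topics", str(num_topics),
        "--num-top-words", str(num_top_words),
        "--output-topic-keys", f"topic_keys_{num_topics}.txt",
        "--output-doc-topics", f"doc_topics_{num_topics}.txt",
    ]


# Print one command per topic count so the outputs can be compared.
for k in (10, 20, 30):
    print(" ".join(train_topics_command(k)))
```

Writing each run to its own pair of output files makes it easier to compare the topic lists side by side.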

In the end, I went with the middle option of 20 topics. Some of these topics (such as 4 and 14) were more coherent than others, but they still weren’t as strong as I would have liked.

Topic modeling is a great analytical method, but for this project, it proved less effective than I had anticipated. The student responses ranged from a single word to a phrase or two to a couple of sentences. I hypothesized that this variance in response length made topic modeling less effective: there were not enough words together to create strong, coherent topics. Realizing that this method did not give me the results I wanted, I decided to try a different approach.
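One way to check the length hypothesis would be to count the words in each response. This is a rough sketch, not something I ran for this project. The function name `summarize_lengths` is hypothetical, and splitting on whitespace is only a crude stand-in for proper tokenization.

```python
from statistics import median


def summarize_lengths(responses):
    """Summarize how many words each non-empty response contains."""
    # Whitespace splitting is a rough word count, not real tokenization.
    counts = [len(r.split()) for r in responses if r.strip()]
    if not counts:
        raise ValueError("no non-empty responses to summarize")
    return {
        "responses": len(counts),
        "min_words": min(counts),
        "median_words": median(counts),
        "max_words": max(counts),
        "single_word": sum(1 for c in counts if c == 1),
    }
```

A low median and a large share of single-word responses would be consistent with the idea that the documents are too short for coherent topics, though it would not prove it.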